Worker performance

This page documents the metrics and configuration options that drive the efficiency of your Worker fleet. It covers performance metric families, Worker configuration options, Task Queue information, backlog counts, Task rates, and how to evaluate Worker availability. It also describes practical methods for querying Task Queue information and strategies for tuning Workers and Task Queue processing so that you can manage your resources effectively.

All metrics on this page are prepended with the temporal_ prefix.

For example, worker_task_slots_available is actually temporal_worker_task_slots_available when used.

The omitted prefix makes the names more readable and descriptive.
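As a small illustration of the naming convention above, the following sketch maps the short names used on this page to the names that are actually emitted. The helper `full_metric_name` is hypothetical and is not part of any Temporal SDK.

```python
METRIC_PREFIX = "temporal_"


def full_metric_name(short_name: str) -> str:
    """Return the emitted metric name for a short name used on this page."""
    if short_name.startswith(METRIC_PREFIX):
        # Already fully qualified, so leave it untouched.
        return short_name
    return METRIC_PREFIX + short_name


print(full_metric_name("worker_task_slots_available"))
```

Use the prefixed name when you write dashboard queries or alert rules.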

Worker performance concepts

A Worker's performance characteristics are affected by several elements, including the following.

Task slots

A Worker Task Slot represents the capacity of a Temporal Worker to execute a single concurrent Task. Slots are crucial for managing the workload and performance of Workers in a Temporal application. They're used for both Workflow and Activity Tasks. When a Worker starts processing a Task, it occupies one slot. The number of available slots directly affects how many Tasks a Worker can handle simultaneously.

Slot suppliers

A Slot Supplier defines a strategy to provide slots for a Worker, increasing or decreasing the Worker's slot count. The supplier determines when it's acceptable to begin a new Task. Each supplier manages one slot type. There are slot types for Activity, Workflow, Nexus, or Local Activity Tasks. An available slot determines whether or not a Worker is willing to poll for, and execute, a new Task of that type.

Slot supplier strategies include manual assignment of fixed slot counts and resource-balanced "auto-tuner" assignment. Resource-based suppliers adjust slot counts based on CPU and memory resources. Available slot suppliers include:

- Fixed Size Slot Suppliers: Hands out slots up to a preset limit. This is useful if you have a concrete idea of how many resources your tasks are going to consume, and can easily determine an upper bound on how many should run at once. When you need the absolute best performance, review your hardware and environment characteristics. This information lets you calculate an appropriate fixed-size limit. Evaluate the maximum number of slots you can support without oversubscribing or hitting out-of-memory conditions ("OOMing"). Using that value with a fixed-size supplier provides optimal results with the least overhead.

- Resource-Based Slot Suppliers: Hands out slots based on real-time CPU and memory usage. You set target utilization for both CPU and memory and the Slot Supplier tries to reach those values without exceeding them under load. A resource-based supplier will account for memory limits imposed in containerized environments. It dynamically adjusts the number of available slots for different task types with respect to current system resources.

- Custom Slot Suppliers: Hands out slots based on the custom logic that you define. Use this approach when you need complete control over when Workers accept and execute Tasks. For implementation details, see Implement Custom Slot Suppliers.

You cannot guarantee that the targets for resource-based suppliers won't ever be exceeded. Resources consumed during a task can't be known ahead of time.

Worker tuners supersede the existing maxConcurrentXXXTask style Worker options. Using both styles will cause an error at Worker initialization time.
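The difference between the fixed-size and resource-based strategies can be sketched with two small decision functions. This is a deliberately simplified model: the names `ResourceSnapshot`, `fixed_size_allows`, and `resource_based_allows` are hypothetical, and a real resource-based supplier applies more elaborate control logic than a plain threshold comparison.

```python
from dataclasses import dataclass


@dataclass
class ResourceSnapshot:
    cpu_usage: float     # fraction of CPU in use, 0.0 to 1.0
    memory_usage: float  # fraction of memory in use, 0.0 to 1.0


def fixed_size_allows(slots_in_use: int, max_slots: int) -> bool:
    """Fixed-size strategy: hand out a slot while under the preset limit."""
    return slots_in_use < max_slots


def resource_based_allows(
    snapshot: ResourceSnapshot, target_cpu: float, target_memory: float
) -> bool:
    """Simplified resource-based strategy: hand out a slot only while
    both CPU and memory usage are below their targets."""
    return snapshot.cpu_usage < target_cpu and snapshot.memory_usage < target_memory


# Nine of ten fixed slots are busy, so one more Task may start.
print(fixed_size_allows(slots_in_use=9, max_slots=10))
# Memory is above its target, so the resource-based check declines.
print(resource_based_allows(ResourceSnapshot(0.4, 0.85), 0.9, 0.8))
```

Note that the resource-based check looks only at current usage. It cannot know how much a newly started Task will consume, which is why targets can still be exceeded.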

Worker tuning

Worker tuning is the process of defining customized slot suppliers for the different task slots of a Worker to fine-tune its performance. You use special types called Worker tuners that assign slot suppliers to various Task Types, including Workflow, Activity, Nexus, and Local Activity Tasks.

For more on how to configure and use Worker tuners, refer to Worker runtime performance tuning.

Worker tuners supersede the existing maxConcurrentXXXTask style Worker options.

Using both styles will cause an error at Worker initialization time.

Task Pollers

A Worker's Task Pollers play a crucial role in the Temporal architecture by efficiently ingesting work to Workers to support scalable, resilient Workflow Execution. Pollers create long-polling connections to the Temporal Service and actively poll a Task Queue for Tasks to process. When a Task Poller receives a Task, it delivers the Task to the appropriate Executor Slot for processing.

Temporal SDKs implement support for Poller Autoscaling, which dynamically adjusts the number of pollers in use to maximize throughput for a given number of workers and the size of the task backlog. Temporal recommends using Poller Autoscaling for the majority of use cases, as manually setting the number of pollers too high or too low for your workload will result in decreased performance. To configure Poller Autoscaling, see Configuring Poller Options and samples for each Temporal SDK.

Eager task execution

Eager start does not respect Worker versioning. An eagerly started Workflow may run on any available local Worker even if that Worker is not the Current or Ramping version of its Worker deployment.

As a latency optimization, Activity and Workflow Tasks may be started eagerly in a local Worker under the right circumstances.

Eager Activity Start

Eager Activity Start may happen automatically if the Worker processing a Workflow Task has also registered the Activity Definition being called. If it does, it may try to reserve an Activity Slot for the execution of the Activity, and the server may respond to the Workflow Task completion with the Activity Task for the worker to execute immediately.

Eager Workflow Start

Eager Workflow Start is available in Public Preview in the Go, Java, Python, and .NET SDKs. Temporal Cloud and Temporal Server 1.29.0 and higher have Eager Workflow Start available for use by default, but you must explicitly Request-Eager-Start when starting a Workflow.

Eager Workflow Start reduces the latency required to initiate a Workflow execution. It is recommended for short-lived Workflows that use Local Activities to interact with external services, especially when these interactions are initiated in the first Workflow Task and the Workflow is deployed near the Temporal Server to minimize network delay.

This feature is particularly beneficial for Workflows with a “happy path” that must begin external interactions within tens of milliseconds, while still relying on Temporal’s server-driven retries and compensation mechanisms to ensure reliability in failure scenarios.

Quick Start

Eager Workflow Start requires the Starter and the Worker to share a Client in the same process, and requires setting request_eager_start (or a similarly named option) to true in the Start Workflow call.

When set, and the Worker has a Workflow Task slot available and the Workflow Definition registered, the Worker can execute the first task of the Workflow locally without first making a round-trip to the Temporal Server.

This is typically most useful in combination with a Local Activity executing in the first Workflow Task, since other Workflow API calls that require waiting on something will force a round-trip.

- Go SDK - Code sample

- Java SDK - Code sample

- Python SDK - use request_eager_start when calling start_workflow or execute_workflow

- .NET SDK - use RequestEagerStart in your WorkflowOptions when starting a workflow

- Blog: Improving Latency with Eager Workflow Start

How it works

The traditional way to start a Workflow decouples the starter program from the worker by sharing a Task Queue name between them, similar to a publish/subscribe pattern. This has many advantages: for example, we can reliably schedule a Workflow Execution without a running Worker, or separate the Worker and Workflow implementation from the Starter application and host them independently.

But decoupling also makes it harder to optimize for latency. Instead, when the Starter and Worker are collocated in the same process and aware of each other, they can interact while bypassing the server, saving a few time-intensive operations.

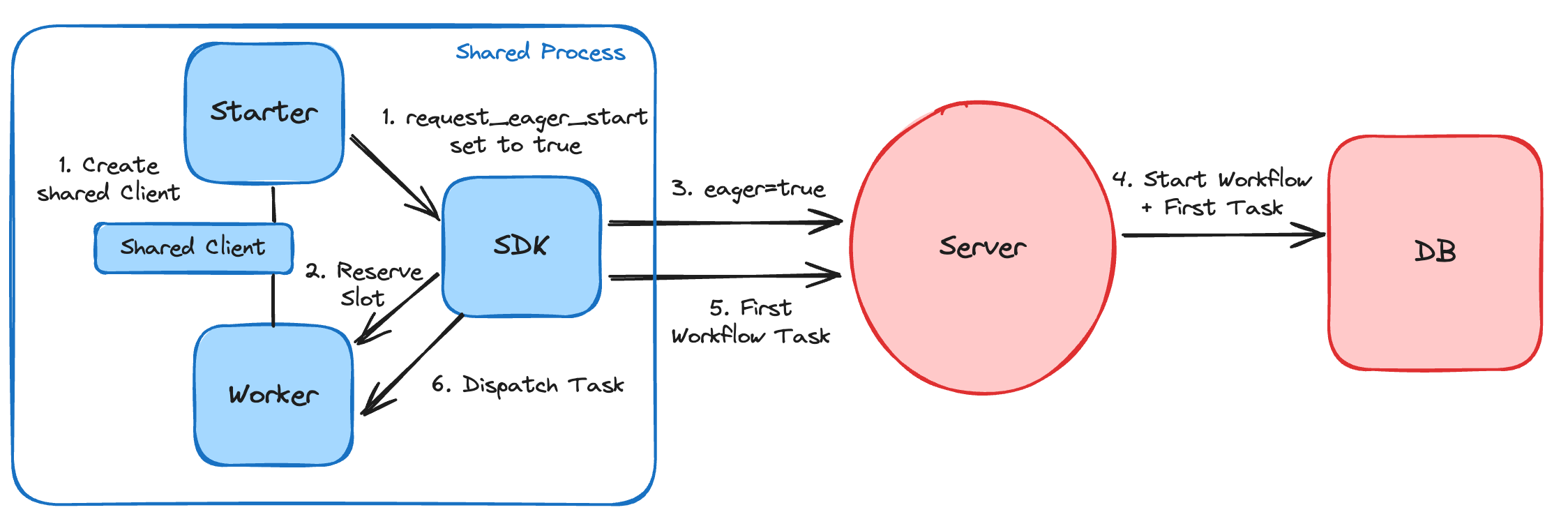

Eager Workflow Start

The above figure shows Eager Workflow Start in action:

- The process begins with the Starter setting request_eager_start (or similar name) to true in the Start Workflow Options.

- The SDK will try to locate a local Worker that is willing to execute the first Workflow Task, and reserve an execution slot for it.

- If successful, the SDK will provide a hint to the server that eager mode is preferred for the new Workflow.

- The server not only registers the start of the Workflow in history, it also assigns the first Workflow Task to the Starter, all in the same DB update.

- The first task is included in the server response, no matching step required.

- The SDK extracts the task from the response, and dispatches it to the local worker.

To recover from errors, Eager Workflow Start falls back to the non-eager path. For example, when the first Task is returned eagerly, but the local Worker fails or times out while processing the task, the server retries this task non-eagerly after WorkflowTaskTimeout.

Performance metrics for tuning

The Temporal SDKs emit metrics from Temporal Client usage and Worker Processes. Performance tuning uses three important SDK metric groups:

Slot availability metrics

The worker_task_slots_available and worker_task_slots_used gauges report, for each Worker type, the number of executor “slots” that are currently available (unoccupied) and the number that are currently in use.

Tag these with worker_type=WorkflowWorker for Workflow Task Workers or worker_type=ActivityWorker for Activity Workers.

Unlike worker_task_slots_used, worker_task_slots_available can only be used with fixed size slot suppliers and can't be used with resource-based slot suppliers.

Latency metrics

Temporal provides two latency timers: workflow_task_schedule_to_start_latency for Workflow Tasks and activity_schedule_to_start_latency for Activities.

A Schedule-To-Start latency is the time from when a Task is scheduled (that is, placed in a Task Queue) to when a Worker starts (that is, picks up from the Task Queue) that Task.

These metrics help ensure that Tasks are being processed from the queue in a timely manner.

For more information about schedule_to_start timeout and latency, see Schedule-To-Start Timeout.
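The definition of Schedule-To-Start latency above amounts to a difference between two timestamps. The following sketch shows that arithmetic only; the function name and the sample timestamps are illustrative and do not come from an SDK.

```python
from datetime import datetime, timedelta, timezone


def schedule_to_start_latency(scheduled_at: datetime, started_at: datetime) -> timedelta:
    """Time a Task waited in the Task Queue before a Worker picked it up."""
    if started_at < scheduled_at:
        raise ValueError("a Task cannot start before it is scheduled")
    return started_at - scheduled_at


# Illustrative timestamps: the Task waited 250 milliseconds in the queue.
scheduled = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
started = scheduled + timedelta(milliseconds=250)
print(schedule_to_start_latency(scheduled, started).total_seconds())
```

A latency that keeps growing suggests that Tasks are arriving faster than Workers can pick them up.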

Cache metrics

The sticky_cache_size and workflow_active_thread_count metrics report the size of the Workflow cache and the number of cached Workflow threads.

Worker performance options

Each Worker can be configured by providing custom Worker options (WorkerOptions) at instantiation.

Options are specific to individual Workers and do not affect other members of your fleet.

Executor slot options

The maxConcurrentWorkflowTaskExecutionSize and maxConcurrentActivityExecutionSize options define the number of total available Workflow Task and Activity Task slots for a Worker.

- Worker tuners supersede the existing maxConcurrentXXXTask style Worker options. Using both styles will cause an error at Worker initialization time.

Configuring Poller Options

Recommended Approach

The Temporal SDKs support Poller Autoscaling, which automatically selects an appropriate number of pollers based on need. Using this feature results in more efficient poller usage, better throughput, and schedule-to-start latency improvements. You can enable this feature by setting the *_task_poller_behavior options to PollerBehaviorAutoscaling. Names may vary slightly depending on the SDK. For specific examples of enabling Poller Autoscaling, see the SDK Examples section below. Poller Autoscaling will be the default configuration in future versions of Temporal SDKs.

Manual Configuration

There are options available to manually configure minimum, maximum, and initial poller counts, but it is not recommended to set these values manually for production use cases. To set these values manually, the following options are available:

- maxConcurrentWorkflowTaskPollers (in the JavaSDK: workflowPollThreadCount)

- maxConcurrentActivityTaskPollers (in the JavaSDK: activityPollThreadCount)

These options define the maximum count of pollers performing poll requests on Workflow and Activity Task Queues, respectively.

SDK Examples

Go SDK

w := worker.New(c, "my-task-queue", worker.Options{

WorkflowTaskPollerBehavior: worker.NewPollerBehaviorAutoscaling(worker.PollerBehaviorAutoscalingOptions{}),

ActivityTaskPollerBehavior: worker.NewPollerBehaviorAutoscaling(worker.PollerBehaviorAutoscalingOptions{}),

NexusTaskPollerBehavior: worker.NewPollerBehaviorAutoscaling(worker.PollerBehaviorAutoscalingOptions{}),

})

Java SDK

public class WorkerExample {

public static void main(String[] args) {

WorkflowServiceStubs service = WorkflowServiceStubs.newLocalServiceStubs();

WorkflowClient client = WorkflowClient.newInstance(service);

WorkerFactory factory = WorkerFactory.newInstance(client);

WorkerOptions workerOptions = WorkerOptions.newBuilder()

.setWorkflowTaskPollersBehavior(new PollerBehaviorAutoscaling())

.setActivityTaskPollersBehavior(new PollerBehaviorAutoscaling())

.setNexusTaskPollersBehavior(new PollerBehaviorAutoscaling())

.build();

Worker worker = factory.newWorker("my-task-queue", workerOptions);

}

}

Python SDK

worker = Worker(

client,

task_queue="my-task-queue",

workflows=[MyWorkflow],

activities=[my_activity],

workflow_task_poller_behavior=PollerBehaviorAutoscaling(),

activity_task_poller_behavior=PollerBehaviorAutoscaling(),

nexus_task_poller_behavior=PollerBehaviorAutoscaling(),

)

TypeScript SDK

const worker = await Worker.create({

connection,

taskQueue: 'my-task-queue',

workflowsPath: require.resolve('./workflows'),

activities,

workflowTaskPollerBehavior: PollerBehavior.autoscaling(),

activityTaskPollerBehavior: PollerBehavior.autoscaling(),

nexusTaskPollerBehavior: PollerBehavior.autoscaling(),

});

.NET C# SDK

using var worker = new TemporalWorker(

client,

new TemporalWorkerOptions("my-task-queue")

{

WorkflowTaskPollerBehavior = new PollerBehavior.Autoscaling(),

ActivityTaskPollerBehavior = new PollerBehavior.Autoscaling(),

NexusTaskPollerBehavior = new PollerBehavior.Autoscaling(),

}

.AddWorkflow<MyWorkflow>()

.AddActivity(MyActivities.MyActivity)

);

Ruby SDK

worker = Temporalio::Worker.new(

client,

'my-task-queue',

workflows: [MyWorkflow],

activities: [MyActivity],

workflow_task_poller_behavior: Temporalio::Worker::PollerBehaviorAutoscaling.new,

activity_task_poller_behavior: Temporalio::Worker::PollerBehaviorAutoscaling.new,

nexus_task_poller_behavior: Temporalio::Worker::PollerBehaviorAutoscaling.new,

)

Cache options

A Workflow Cache is created and shared between all Workers on a single host.

It's designed to limit the resources used by the cache for each host/process.

These options are defined on WorkerFactoryOptions in JavaSDK and in worker package in GoSDK:

- worker.setStickyWorkflowCacheSize (JavaSDK: WorkerFactoryOptions#workflowCacheSize) defines the maximum number of cached Workflow Executions. Each cached Workflow contains at least one Workflow thread and its resources, such as memory.

- maxWorkflowThreadCount defines the maximum number of Workflow threads that may exist concurrently at any time.

These cache options limit the resource consumption of the in-memory Workflow cache. Workflow cache options are shared between all Workers because the Workflow cache is tightly integrated with the resource consumption of the entire host. This includes memory and the total thread count, which should be limited per host/JVM.

"Large value" drawbacks

There are drawbacks when you use "large values everywhere." As with any multithreading system, specifying excessively large values without monitoring SDK and system metrics leads to constant resource contention/stealing. This decreases the total throughput and increases latency jitter of the system.

Invariants (JavaSDK only)

These properties should always be true for a Worker's configuration.

Perform this sanity check after the adjustments to Worker settings.

- workflowCacheSize should be ≤ maxWorkflowThreadCount. Each Workflow has at least one Workflow thread.

- maxConcurrentWorkflowTaskExecutionSize should be ≤ maxWorkflowThreadCount. Having more Worker slots than the Workflow cache size will lead to resource contention/stealing between executors and unpredictable delays. It's recommended that maxWorkflowThreadCount be at least 2x maxConcurrentWorkflowTaskExecutionSize.

- maxConcurrentWorkflowTaskPollers should be significantly lower than maxConcurrentWorkflowTaskExecutionSize, and maxConcurrentActivityTaskPollers should be significantly lower than maxConcurrentActivityExecutionSize. The number of pollers should always be lower than the number of executors.
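The invariants above lend themselves to an automated sanity check. The sketch below is one possible way to encode them. `WorkerSettings` and `check_invariants` are hypothetical names, and the fields simply mirror the option names used on this page rather than any SDK type.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class WorkerSettings:
    workflow_cache_size: int
    max_workflow_thread_count: int
    max_concurrent_workflow_task_execution_size: int
    max_concurrent_activity_execution_size: int
    max_concurrent_workflow_task_pollers: int
    max_concurrent_activity_task_pollers: int


def check_invariants(s: WorkerSettings) -> List[str]:
    """Return a description of every violated invariant (empty if none)."""
    problems = []
    if s.workflow_cache_size > s.max_workflow_thread_count:
        problems.append("workflowCacheSize exceeds maxWorkflowThreadCount")
    if s.max_concurrent_workflow_task_execution_size > s.max_workflow_thread_count:
        problems.append(
            "maxConcurrentWorkflowTaskExecutionSize exceeds maxWorkflowThreadCount"
        )
    # Pollers should always be fewer than executors of the same type.
    if s.max_concurrent_workflow_task_pollers >= s.max_concurrent_workflow_task_execution_size:
        problems.append("Workflow Task pollers are not fewer than Workflow Task executors")
    if s.max_concurrent_activity_task_pollers >= s.max_concurrent_activity_execution_size:
        problems.append("Activity Task pollers are not fewer than Activity executors")
    return problems


settings = WorkerSettings(
    workflow_cache_size=700,  # larger than the thread limit below
    max_workflow_thread_count=600,
    max_concurrent_workflow_task_execution_size=200,
    max_concurrent_activity_execution_size=200,
    max_concurrent_workflow_task_pollers=5,
    max_concurrent_activity_task_pollers=5,
)
for problem in check_invariants(settings):
    print(problem)
```

Running a check like this after each configuration change catches mistakes before they show up as contention in production. The recommended 2x headroom between maxWorkflowThreadCount and maxConcurrentWorkflowTaskExecutionSize is not enforced here because it is a recommendation rather than an invariant.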

Worker runtime performance tuning

Worker tuning manages the assignment of slot suppliers. A Worker Tuner instance exists per-Worker, providing slot suppliers for different slot types (Activity, Workflow, Nexus, or Local Activity Tasks). A tuner assigns different suppliers to each slot type. For example, it might provide a fixed assignment slot supplier for Workflows and use a resource-based supplier for Activities.

Choosing slot supplier types

Temporal offers three types of slot suppliers: fixed assignment, resource-based, and custom. Here’s how to choose the best approach based on your system requirements and workload characteristics.

When choosing whether to opt for fixed assignment or resource-based suppliers, consider:

- Workflow Tasks make minimal demands on the CPU and, normally, do not consume much memory. They are well-served by fixed-sized slot suppliers.

- When very low Task completion latency is a concern, avoid resource-based auto-tuning slot suppliers.

- Reserve auto-tuned resource-based slot suppliers for deployments focused on avoiding Worker overload. They provide excellent balance with built-in throttling that ensures the Worker will be cautious when handing out new executor slots.

The following use cases are particularly well suited to resource-based auto-tuning slot suppliers:

- Fluctuating workloads with low per-Task consumption: The resource-based supplier works well when each Task consumes few resources but may run for a (relatively) long time. For example: HTTP calls or other blocking I/Os that spend most of their time waiting on external events.

- Protection from out-of-memory & over-subscription in the face of unpredictable per-task consumption: Do your Tasks often consume an unpredictable number of resources? Do you want to avoid crashes without setting an overly-conservative fixed limit? In these cases, the resource-based supplier is a good match. Keep in mind that auto-tuning can never do a perfect job and may sometimes exceed your requested system limits for CPU and memory.

For the highest level of control over slot allocation, consider custom slot suppliers. This allows you to tailor the logic of how slots are allocated based on your system requirements. Custom suppliers provide flexibility to optimize for specific use cases that fixed assignment and resource-based suppliers may not fully address.

Choosing the right slot supplier depends on your workload complexity and the control you need over resource allocation. For predictable tasks, variable workloads, or complex dynamic scenarios, Temporal slot suppliers can meet your needs.

Implement Custom Slot Suppliers

Implement your own Slot Supplier to control how Workers are allocated Tasks and manage the processing of Workflows, Activities, and Nexus Operations. Custom Slot Suppliers let you fine-tune task processing based on your application's needs.

Each SDK's reference documentation explains the specifics of the interface, but the core concepts are consistent across SDKs:

| Language | Slot Supplier Reference |
|---|---|
| Go | SlotSupplier |
| Java | SlotSupplier |
| Python | CustomSlotSupplier |
| TypeScript | CustomSlotSupplier |
| .NET | CustomSlotSupplier |

Slot Suppliers issue SlotPermits.

These represent the right to use a slot of a specific type, namely Workflow, Activity, Local Activity, or Nexus.

You control whether a Worker can perform certain tasks by issuing or withholding permits.

Custom Slot Suppliers must implement these functions:

- reserveSlot - Called before polling for new tasks. Your implementation can block and must return a Slot Permit once it decides to accept new work.

- tryReserveSlot - Called for slot reservations in cases like eager activity processing. This must not block.

- markSlotUsed - Called when a slot is about to be used for a task (not while it’s held during polling). It provides information about the task.

- releaseSlot - Called when a slot is no longer needed, whether or not it was used.

Custom policies require more effort, but provide finer control over Task processing. By implementing your own Slot Supplier, you can tailor how Workflows, Activities, and Nexus Operations are handled, optimizing performance for your specific needs.
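To make the four functions concrete, here is a minimal, SDK-independent sketch of a supplier that caps concurrency with a semaphore. It is not the interface of any Temporal SDK: the class names, method signatures, and the `task_info` and `was_used` parameters are assumptions chosen for illustration, so consult your SDK's reference for the real types.

```python
import threading
from typing import Optional


class SlotPermit:
    """Opaque token representing the right to use one slot."""


class BoundedSlotSupplier:
    """Illustrative supplier that never issues more than max_slots permits."""

    def __init__(self, max_slots: int) -> None:
        self._semaphore = threading.BoundedSemaphore(max_slots)
        self._lock = threading.Lock()
        self.slots_in_use = 0  # permits currently executing a task

    def reserve_slot(self) -> SlotPermit:
        # May block: called before polling, returns once work can be accepted.
        self._semaphore.acquire()
        return SlotPermit()

    def try_reserve_slot(self) -> Optional[SlotPermit]:
        # Must not block: returns None when no slot is free right now.
        if self._semaphore.acquire(blocking=False):
            return SlotPermit()
        return None

    def mark_slot_used(self, permit: SlotPermit, task_info: str) -> None:
        # Called when the reserved slot is about to run an actual task.
        with self._lock:
            self.slots_in_use += 1

    def release_slot(self, permit: SlotPermit, was_used: bool) -> None:
        # Called whether or not the slot ended up running a task.
        if was_used:
            with self._lock:
                self.slots_in_use -= 1
        self._semaphore.release()


supplier = BoundedSlotSupplier(max_slots=1)
permit = supplier.try_reserve_slot()
print(permit is not None)            # a slot was free
print(supplier.try_reserve_slot())   # no second slot while the first is held
supplier.release_slot(permit, was_used=False)
```

Separating reservation from use matters because a permit is held while the Worker polls, which can be long before any task arrives.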

Slot supplier throttles

Auto-tuned suppliers may diverge from requested thresholds. The resources a given Task will use can't be known ahead of time. There is a fundamental tradeoff between how quickly a slot supplier is willing to accept Tasks and how well it can respect the defined thresholds.

Slot throttling is a mechanism to control the rate at which new slots for concurrent tasks are made available for processing. This concept is part of the resource-based auto-tuning feature for Workers. By waiting a brief period between making slots available, the Worker can assess how resource usage has changed since the last task began processing.

This throttle is called rampThrottle in the SDK options for resource-based slot suppliers.

It defines the minimum time the Worker will wait between handing out new slots after passing the minimum slots number.

If a just-started worker were to have no throttle, and there was a backlog of Tasks, it might immediately accept 100 Tasks at once. If each Task allocated 1GB of RAM, the Worker would likely run out of memory and crash. The throttle enforces a wait before handing out new slots (after a minimum number of slots have been occupied) so you can measure newly consumed resources.
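The throttle behavior described above can be sketched as a small gate that lets slots through freely until a minimum is occupied and then enforces a wait between hand-outs. `RampThrottle` here is a hypothetical class written for illustration, with an injectable clock so the behavior is easy to test. It is not the SDK's implementation.

```python
import time
from typing import Callable, Optional


class RampThrottle:
    """Gate that spaces out slot hand-outs once minimum_slots are occupied."""

    def __init__(
        self,
        minimum_slots: int,
        ramp_throttle_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.minimum_slots = minimum_slots
        self.ramp_throttle_seconds = ramp_throttle_seconds
        self._clock = clock
        self._last_issue: Optional[float] = None

    def may_issue(self, slots_in_use: int) -> bool:
        now = self._clock()
        if slots_in_use < self.minimum_slots:
            allowed = True  # below the minimum, no throttling applies
        else:
            allowed = (
                self._last_issue is None
                or now - self._last_issue >= self.ramp_throttle_seconds
            )
        if allowed:
            self._last_issue = now
        return allowed


# A fake clock makes the example deterministic.
fake_time = [0.0]
throttle = RampThrottle(minimum_slots=2, ramp_throttle_seconds=0.05, clock=lambda: fake_time[0])
print(throttle.may_issue(0), throttle.may_issue(1))  # under the minimum
print(throttle.may_issue(2))                          # too soon after the last slot
fake_time[0] = 0.1
print(throttle.may_issue(2))                          # enough time has passed
```

The pause gives the Worker a chance to observe the resources consumed by the Task it just started before it accepts another one.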

Performance tuning examples

The following examples show how to create and provision composite Worker tuners and set other performance related options. Each tuner provides slot suppliers for various Task types. These examples focus on Activities and Local Activities, since Workflow Tasks normally do not need resource-based tuning.

Go SDK

// Using the ResourceBasedTuner in worker options

tuner, err := resourcetuner.NewResourceBasedTuner(resourcetuner.ResourceBasedTunerOptions{

TargetMem: 0.8,

TargetCpu: 0.9,

})

if err != nil {

return err

}

workerOptions := worker.Options{

Tuner: tuner,

}

// Combining different types

options := DefaultResourceControllerOptions()

options.MemTargetPercent = 0.8

options.CpuTargetPercent = 0.9

controller := NewResourceController(options)

wfSS, err := worker.NewFixedSizeSlotSupplier(10)

if err != nil {

return err

}

actSS := &ResourceBasedSlotSupplier{controller: controller,

options: defaultActivityResourceBasedSlotSupplierOptions()}

laSS := &ResourceBasedSlotSupplier{controller: controller,

options: defaultActivityResourceBasedSlotSupplierOptions()}

nexusSS, err := worker.NewFixedSizeSlotSupplier(10)

if err != nil {

return err

}

compositeTuner, err := worker.NewCompositeTuner(worker.CompositeTunerOptions{

WorkflowSlotSupplier: wfSS,

ActivitySlotSupplier: actSS,

LocalActivitySlotSupplier: laSS,

NexusSlotSupplier: nexusSS,

})

if err != nil {

return err

}

workerOptions := worker.Options{

Tuner: compositeTuner,

}

Java SDK

// Just resource based

WorkerOptions.newBuilder()

.setWorkerTuner(

ResourceBasedTuner.newBuilder()

.setControllerOptions(

ResourceBasedControllerOptions.newBuilder(0.8, 0.9).build())

.build())

.build();

// Combining different types

SlotSupplier<WorkflowSlotInfo> workflowTaskSlotSupplier = new FixedSizeSlotSupplier<>(10);

SlotSupplier<ActivitySlotInfo> activityTaskSlotSupplier =

ResourceBasedSlotSupplier.createForActivity(

resourceController, ResourceBasedTuner.DEFAULT_ACTIVITY_SLOT_OPTIONS);

SlotSupplier<LocalActivitySlotInfo> localActivitySlotSupplier =

ResourceBasedSlotSupplier.createForLocalActivity(

resourceController, ResourceBasedTuner.DEFAULT_ACTIVITY_SLOT_OPTIONS);

SlotSupplier<NexusSlotInfo> nexusSlotSupplier = new FixedSizeSlotSupplier<>(10);

WorkerOptions.newBuilder()

.setWorkerTuner(

new CompositeTuner(

workflowTaskSlotSupplier,

activityTaskSlotSupplier,

localActivitySlotSupplier,

nexusSlotSupplier))

.build();

TypeScript SDK

// Just resource based

const resourceBasedTunerOptions: ResourceBasedTunerOptions = {

targetMemoryUsage: 0.8,

targetCpuUsage: 0.9,

};

const workerOptions = {

tuner: {

tunerOptions: resourceBasedTunerOptions,

},

};

// Combining different types

const resourceBasedTunerOptions: ResourceBasedTunerOptions = {

targetMemoryUsage: 0.8,

targetCpuUsage: 0.9,

};

const workerOptions = {

tuner: {

activityTaskSlotSupplier: {

type: 'resource-based',

tunerOptions: resourceBasedTunerOptions,

},

workflowTaskSlotSupplier: {

type: 'fixed-size',

numSlots: 10,

},

localActivityTaskSlotSupplier: {

type: 'resource-based',

tunerOptions: resourceBasedTunerOptions,

},

},

};

Python SDK

# Just a resource based tuner, with poller autoscaling

tuner = WorkerTuner.create_resource_based(

target_memory_usage=0.5,

target_cpu_usage=0.5,

)

worker = Worker(

client,

task_queue="foo",

tuner=tuner,

workflow_task_poller_behavior=PollerBehaviorAutoscaling(),

activity_task_poller_behavior=PollerBehaviorAutoscaling()

)

# Combining different types, with poller autoscaling

resource_based_options = ResourceBasedTunerConfig(0.8, 0.9)

tuner = WorkerTuner.create_composite(

workflow_supplier=FixedSizeSlotSupplier(10),

activity_supplier=ResourceBasedSlotSupplier(

ResourceBasedSlotConfig(),

resource_based_options,

),

local_activity_supplier=ResourceBasedSlotSupplier(

ResourceBasedSlotConfig(),

resource_based_options,

),

)

worker = Worker(

client,

task_queue="foo",

tuner=tuner,

workflow_task_poller_behavior=PollerBehaviorAutoscaling(),

activity_task_poller_behavior=PollerBehaviorAutoscaling()

)

.NET C# SDK

// Just resource based

var worker = new TemporalWorker(

Client,

new TemporalWorkerOptions("my-task-queue")

{

Tuner = WorkerTuner.CreateResourceBased(0.8, 0.9),

});

// Combining different types

var resourceTunerOptions = new ResourceBasedTunerOptions(0.8, 0.9);

var worker = new TemporalWorker(

Client,

new TemporalWorkerOptions("my-task-queue")

{

Tuner = new WorkerTuner(

new FixedSizeSlotSupplier(10),

new ResourceBasedSlotSupplier(

new ResourceBasedSlotSupplierOptions(),

resourceTunerOptions),

new ResourceBasedSlotSupplier(

new ResourceBasedSlotSupplierOptions(),

resourceTunerOptions)),

});

Workflow Cache Tuning

When the number of cached Workflow Executions reported by sticky_cache_size hits workflowCacheSize or the number of threads reported by the workflow_active_thread_count metrics gauge hits maxWorkflowThreadCount, Workflow Executions will start to be evicted from the cache.

An evicted Workflow Execution will need to be replayed when it gets any action that may advance it.

If the Workflow Cache limits described above are hit, and Worker hosts have enough free RAM and are not close to reasonable thread limits, then you may choose to increase workflowCacheSize and maxWorkflowThreadCount limits to decrease the overall latency and cost of the Replays in the system.

If the opposite occurs, consider decreasing the limits.

In CoreSDK based SDKs, like TypeScript, this metric works differently and should be monitored and adjusted on a per Worker and Task Queue basis.

The maxWorkflowThreadCount and workflow_active_thread_count parameters are for the Java SDK only.
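The eviction condition and the tuning guidance above can be expressed as two small checks. This is only a sketch: the function names are hypothetical, and `host_has_headroom` stands in for your own judgment about free RAM and thread limits on the Worker host.

```python
def cache_at_limit(
    sticky_cache_size: int,
    workflow_cache_size: int,
    workflow_active_thread_count: int,
    max_workflow_thread_count: int,
) -> bool:
    """True when either limit is reached, so evictions can be expected."""
    return (
        sticky_cache_size >= workflow_cache_size
        or workflow_active_thread_count >= max_workflow_thread_count
    )


def should_consider_raising_limits(at_limit: bool, host_has_headroom: bool) -> bool:
    """Raising limits only makes sense when the host can absorb the cost."""
    return at_limit and host_has_headroom


at_limit = cache_at_limit(600, 600, 450, 1200)
print(at_limit)
print(should_consider_raising_limits(at_limit, host_has_headroom=True))
```

Feed these checks with the gauge values reported by your metrics system rather than with one-off samples, since short spikes are normal.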

Available Task Queue information

The information listed in this section is readable using the DescribeTaskQueueEnhanced method in the Go SDK, with the Temporal CLI task-queue describe command, and using DescribeTaskQueue through RPC.

The Temporal Service reports information separately for each Task Queue type (not aggregated). Use the following Task Queue properties to retrieve and evaluate information about Task Queue health and performance. Available data include:

- ApproximateBacklogCount and ApproximateBacklogAge

- TasksAddRate and TasksDispatchRate

- BacklogIncreaseRate (derived from TasksAddRate and TasksDispatchRate)

ApproximateBacklogCount and ApproximateBacklogAge

ApproximateBacklogCount represents the approximate count of Tasks currently backlogged in this Task Queue.

The number may include expired Tasks as well as active Tasks, but it will eventually converge to the correct count over time.

ApproximateBacklogAge returns the approximate age of the oldest Task in the backlog.

The age is based on the creation time of the Task at the head of the queue.

You can rely on both these counts when making scaling decisions.

Please note: Sticky queues will affect these values, but only for a few seconds.

That's because Tasks sent to Sticky queues are not included in the returned values for ApproximateBacklogCount and ApproximateBacklogAge.

Inaccuracies diminish as the backlog grows.

TasksAddRate and TasksDispatchRate

Reports the approximate Tasks-per-second added to or dispatched from a Task Queue. This rate is averaged over the most recent 30-second time interval. The calculations include Tasks that were added to or dispatched from the backlog as well as Tasks that were immediately dispatched and bypassed the backlog (sync-matched).

The actual Task delivery count may be significantly higher than the number reported by these two values:

- Eager dispatch refers to a Temporal feature where Activities can be requested by an SDK using one Workflow Task completion response. Tasks using Eager dispatch do not pass through Task Queues.

- Tasks passed to Sticky Task Queues are not included in the returned values for TasksAddRate and TasksDispatchRate.

BacklogIncreaseRate

Approximates the net Tasks per second added to the backlog, averaged over the most recent 30 seconds. This is calculated as:

TasksAddRate - TasksDispatchRate

- Positive values of X indicate the backlog is growing by about X Tasks per second.

- Negative values of X indicate the backlog is shrinking by about X Tasks per second.

While individual add and dispatch rates may be inaccurate due to Eager and Sticky Task Queues, the BacklogIncreaseRate reliably reflects the rate at which the backlog is shrinking or growing for backlogs older than a few seconds.
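As a worked example of the formula above, the following sketch computes the rate and, when the backlog is shrinking, a rough time to drain it. The `seconds_to_drain` helper is an illustrative addition that assumes the rate stays constant, and the sample numbers are made up.

```python
from typing import Optional


def backlog_increase_rate(tasks_add_rate: float, tasks_dispatch_rate: float) -> float:
    """Net Tasks per second added to the backlog."""
    return tasks_add_rate - tasks_dispatch_rate


def seconds_to_drain(backlog_count: int, increase_rate: float) -> Optional[float]:
    """Rough drain time at a constant rate, or None if the backlog is not shrinking."""
    if increase_rate >= 0:
        return None
    return backlog_count / -increase_rate


rate = backlog_increase_rate(tasks_add_rate=12.0, tasks_dispatch_rate=15.0)
print(rate)                         # negative, so the backlog is shrinking
print(seconds_to_drain(300, rate))  # estimate for a backlog of 300 Tasks
```

Because the underlying rates are 30-second averages, treat the drain time as an estimate for trend analysis rather than a precise prediction.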

Evaluate Task Queue performance

A Task Queue is a lightweight, dynamically allocated queue. Worker Entities poll the queue for Tasks and retrieve Tasks to work on. Tasks are contexts that a Worker progresses using a specific Workflow Execution, Activity Execution, or a Nexus Task Execution. Each Task Queue type offers its Tasks to compatible Workers for Task completion. The Temporal Service dynamically creates different Task Queue types including Activity Task Queues, Workflow Task Queues, and Nexus Task Queues.

With an accurate estimate of backlog Tasks, you can determine the optimal number of Workers to deploy. Balance your Worker count with the number of Tasks to achieve the best performance. This approach minimizes Task backlog saturation and reduces idle Workers.

Task Queue data provide numerical insights into your Task Queue activity and backlog characteristics. Use these numbers to tune your production deployments. Evaluate your Worker loads and assess whether you need to scale up or reduce your Worker deployment.

Visibility API rate limits apply to Task Queue performance data requests.

Query Task Queue info with Temporal CLI

The Temporal CLI helps you monitor and evaluate Worker performance. Issue the following command to display a list of active Workers that have recently polled a Task Queue:

temporal task-queue describe \
    --task-queue YourTaskQueueName \
    [additional options]

This command retrieves poller information, backlog statistics, and task reachability for Task types (available in Temporal Server v1.25.0, Temporal CLI 1.1 and later).

Task reachability status is experimental. Determining Task reachability incurs a non-trivial computing cost. This feature may significantly change or be removed in a future release.

Query Task Queue info with the Go SDK

Retrieve Task Queue data using the Go SDK by calling DescribeTaskQueueEnhanced.

Specify the Task Queue name and set ReportStats to true, as in the following example:

for _, taskQueueName := range taskQueueNames {
	resp, err := s.client.DescribeTaskQueueEnhanced(ctx, client.DescribeTaskQueueEnhancedOptions{
		TaskQueue:   taskQueueName,
		ReportStats: true,
	})
	if err != nil {
		// Skip this Task Queue: the response is not usable when the call fails.
		log.Printf("Error describing task queue %s: %v", taskQueueName, err)
		continue
	}
	// Add this Task Queue's backlog count from the enhanced response.
	backlogCount += getBacklogCount(resp)
}
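The loop calls getBacklogCount without showing it. One possible shape for such a helper is sketched below. The typeStats and queueDescription types are hypothetical stand-ins defined only so the example compiles on its own; they are not the SDK's response types, so check the Go SDK reference for the real field layout before adapting this.

```go
package main

// typeStats is a hypothetical stand-in for the statistics reported for one
// Task Queue type. Only the field used in this sketch is modeled.
type typeStats struct {
	ApproximateBacklogCount int64
}

// queueDescription is a hypothetical stand-in for a describe response.
// A nil entry means no stats were reported for that Task Queue type.
type queueDescription struct {
	StatsByType map[string]*typeStats
}

// getBacklogCount sums the approximate backlog across all Task Queue types,
// skipping any type that has no stats.
func getBacklogCount(desc queueDescription) int64 {
	var total int64
	for _, stats := range desc.StatsByType {
		if stats != nil {
			total += stats.ApproximateBacklogCount
		}
	}
	return total
}
```

The nil check matters in this sketch because a type without stats would otherwise cause a nil pointer dereference.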

Evaluate Worker availability and capacity issues

Each Temporal Server records the last time of each poll request.

This time is displayed in the temporal task-queue describe output.

- A LastAccessTime value exceeding one minute may indicate that the Worker fleet is at capacity or that Workers have shut down or been removed.

- Values under 5 minutes typically suggest the Worker fleet is at capacity. "At capacity" means that all Workflow and Activity slots are full.

- Values over 5 minutes since the last poll request usually suggest that Workers have shut down or been removed. Workers are removed if 5 minutes have passed since the last poll request.

Manage your Worker fleet

You can adjust the number of Workers to enhance Workflow Execution performance and manage your fleet size. For instance, a large backlog of Tasks with too few Workers slows down Workflow Execution completions and decreases processing efficiency. Adding more Workers speeds up completion and improves throughput. A consistently empty backlog may indicate low Worker utilization, in which case you can reduce your fleet and its associated costs.

The values provided by temporal task-queue describe can help you manage your Worker fleet deployment:

- ApproximateBacklogAge shows how long Tasks have been waiting to be dispatched. If this time grows too long, more Workers can boost Workflow efficiency.

- Calculate the demand per Worker by dividing the number of backlogged Tasks (ApproximateBacklogCount) by the number of Workers. Determine if your task processing rate is within an acceptable range for your needs using the per-Worker demand (how many Tasks each Worker has yet to process), the backlog consumption rate (TasksDispatchRate, the rate at which Workers are processing Tasks), and the dispatch latency (ApproximateBacklogAge, the time the oldest Task has been waiting to be assigned to a Worker).

- The backlog increase rate (BacklogIncreaseRate) shows the changing demand on your Workers over time. As this rate increases, you may need to add more Workers until demand and capacity are balanced. As it decreases, you may be able to reduce your Worker fleet.

Task Queue processing tuning

The following steps limit delays in Task Queue processing due to insufficient or unbalanced Workers.

Review these steps if you notice high schedule_to_start metrics.

The steps are arranged in the recommended order of execution.

Hosts and Resources provisioning

If the currently provisioned Worker hosts are fully utilized (near full CPU usage, high load average, etc.), provision additional Worker hosts to increase the capacity of the Worker pool.

It's possible to have too many Workers

Monitor the poll success (poll_success and poll_success_sync) and poll timeout (poll_timeouts) Server metric counters.

Poll Success Rate = (poll_success + poll_success_sync) / (poll_success + poll_success_sync + poll_timeouts)

Poll Success Rate should be above 90% for most systems with a steady load. For high-volume, low-latency systems, target above 95%.

If you see all of the following at the same time:

- low Poll Success Rate,

- low schedule_to_start_latency, and

- low Worker hosts resource utilization,

then you might have too many Workers. Consider sizing down.
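The Poll Success Rate formula and the three-part check above can be sketched as follows. The function names are hypothetical, and using the 90% target as the cutoff for a "low" rate is an assumption made for illustration; the page does not define a specific threshold for "low".

```go
package main

// pollSuccessRate applies the formula above:
// (poll_success + poll_success_sync) /
// (poll_success + poll_success_sync + poll_timeouts).
// It reports false when no polls were recorded, since the rate is undefined.
func pollSuccessRate(pollSuccess, pollSuccessSync, pollTimeouts float64) (float64, bool) {
	total := pollSuccess + pollSuccessSync + pollTimeouts
	if total == 0 {
		return 0, false
	}
	return (pollSuccess + pollSuccessSync) / total, true
}

// lowPollSuccessRate is an assumed cutoff, taken from the 90% target above.
const lowPollSuccessRate = 0.90

// mightHaveTooManyWorkers returns true only when all three conditions hold:
// a low Poll Success Rate, low schedule-to-start latency, and low host
// resource utilization.
func mightHaveTooManyWorkers(rate float64, lowScheduleToStart, lowHostUtilization bool) bool {
	return rate < lowPollSuccessRate && lowScheduleToStart && lowHostUtilization
}
```

With 800 poll_success, 150 poll_success_sync, and 50 poll_timeouts, the rate is 950 / 1000, or 95%.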

Worker Executor Slots sizing

The main area to focus on when tuning is the number of Worker Executor Slots.

Increase the maximum number of working slots by adjusting maxConcurrentWorkflowTaskExecutionSize or maxConcurrentActivityExecutionSize if both of the following conditions are met:

- The Worker hosts are underutilized (no bottlenecks on CPU, load average, etc.).

- The worker_task_slots_available metric from the corresponding Worker type frequently shows a depleted number of available Worker slots.

Alternatively, consider using a resource-based slot supplier, as described in the Slot suppliers section.

Poller count

Sometimes it is appropriate to increase the number of Task pollers. This is most common when your Workers have relatively high latency when communicating with the server. Automated poller tuning can handle this for you.

Consider manual adjustment if:

- The Worker hosts are underutilized, for example, there are no bottlenecks on CPU, load average, etc.

- The worker_task_slots_available metric from the corresponding Worker type shows that a significant percentage of Worker slots are available on a regular basis.

- The schedule_to_start metric is abnormally long.

In that case, increase the number of pollers by adjusting maxConcurrentWorkflowTaskPollers or maxConcurrentActivityTaskPollers, depending on which type of schedule_to_start metric is elevated.
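The conditions in this section and in the preceding slot sizing section can be sketched as one decision helper. Everything here is hypothetical: the function name, the returned strings, and especially the two fractions used to interpret "depleted" and "a significant percentage" of available slots, which this page does not quantify.

```go
package main

// Assumed cutoffs for illustration only. The page does not define them.
const (
	depletedSlotFraction = 0.10 // at or below this, slots count as depleted
	idleSlotFraction     = 0.50 // at or above this, many slots count as idle
)

// suggestTuning maps the observations described above to a suggested step.
// availableSlotFraction is worker_task_slots_available divided by the
// configured maximum slot count for that Worker type.
func suggestTuning(hostsUnderutilized bool, availableSlotFraction float64, scheduleToStartElevated bool) string {
	if !hostsUnderutilized {
		// Neither adjustment applies unless hosts have spare capacity.
		return "check host capacity first"
	}
	switch {
	case availableSlotFraction <= depletedSlotFraction:
		// Slots are frequently exhausted while hosts are idle.
		return "increase executor slots"
	case availableSlotFraction >= idleSlotFraction && scheduleToStartElevated:
		// Slots sit idle while Tasks wait, so polling may be the bottleneck.
		return "increase pollers"
	default:
		return "no change suggested"
	}
}
```

A single sample is not enough to act on. The text describes these conditions as holding frequently or on a regular basis, so feed the helper values aggregated over time.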

Rate Limiting

If, after adjusting the poller and executor slot counts as described earlier, you still observe an elevated schedule_to_start, underutilized Worker hosts, or high worker_task_slots_available, check the following:

- If server-side rate limiting per Task Queue is set by WorkerOptions#maxTaskQueueActivitiesPerSecond, remove the limit or adjust the value up. (See Go and Java.)

- If Worker-side rate limiting per Worker is set by WorkerOptions#maxWorkerActivitiesPerSecond, remove the limit. (See Go, TypeScript, and Java.)